Research Areas

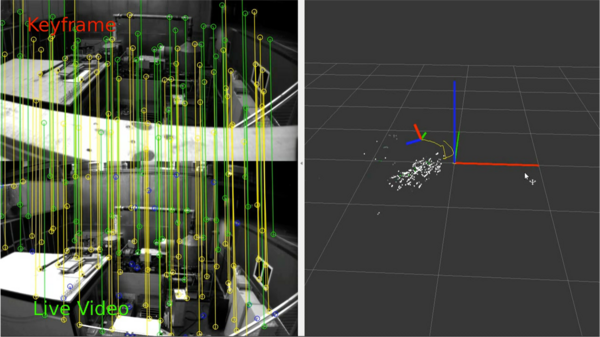

Multi-Sensor SLAM

Integrating complementary sensing modalities has become a popular approach to highly robust and accurate robot state estimation and mapping. In particular, Inertial Measurement Units (IMUs) have become smaller and cheaper and are present in most of today's mobile devices. Various combinations of an IMU with GPS, odometry, magnetic compasses and visual correspondences have been proposed and will continue to be researched, forming the backbone of autonomous mobile robot deployment.
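As a minimal illustration of the IMU/GPS case, the sketch below fuses the two sensors in a one-dimensional linear Kalman filter: IMU acceleration drives the prediction step, and each GPS position fix corrects the accumulated drift. This is a textbook simplification, not the estimator used in this research; the class `ImuGpsFilter1D`, the constant-acceleration motion model and the noise values are hypothetical assumptions chosen for illustration.

```python
import random


class ImuGpsFilter1D:
    """Hypothetical 1D Kalman filter: IMU acceleration predicts, GPS position corrects."""

    def __init__(self, accel_noise=0.5, gps_noise=2.0):
        self.x = [0.0, 0.0]                 # state: [position (m), velocity (m/s)]
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # state covariance
        self.q = accel_noise ** 2           # assumed IMU acceleration variance
        self.r = gps_noise ** 2             # assumed GPS position variance

    def predict(self, accel, dt):
        """Propagate the state with one IMU acceleration sample."""
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        P = self.P
        # P <- F P F^T + G q G^T, with F = [[1, dt], [0, 1]] and G = [dt^2/2, dt]
        p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
        p01 = P[0][1] + dt * P[1][1]
        p10 = P[1][0] + dt * P[1][1]
        p11 = P[1][1]
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[p00 + g0 * g0 * self.q, p01 + g0 * g1 * self.q],
                  [p10 + g1 * g0 * self.q, p11 + g1 * g1 * self.q]]

    def update(self, gps_pos):
        """Correct the state with one GPS position fix (measurement matrix H = [1, 0])."""
        P = self.P
        s = P[0][0] + self.r                # innovation variance
        k0, k1 = P[0][0] / s, P[1][0] / s   # Kalman gain
        y = gps_pos - self.x[0]             # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
                  [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]


if __name__ == "__main__":
    random.seed(0)
    f = ImuGpsFilter1D()
    true_p, true_v, a, dt = 0.0, 0.0, 0.2, 0.01
    for step in range(1, 1001):                      # 10 s of 100 Hz IMU data
        true_p += true_v * dt + 0.5 * a * dt * dt
        true_v += a * dt
        f.predict(a + random.gauss(0.0, 0.05), dt)   # noisy IMU sample
        if step % 100 == 0:                          # 1 Hz GPS fix
            f.update(true_p + random.gauss(0.0, 2.0))
    print(f"true position {true_p:.2f} m, estimate {f.x[0]:.2f} m")
```

Real systems typically estimate full 3D pose, IMU biases and gravity direction with nonlinear filters or optimisation; the 1D linear case only shows why the two sensors complement each other: the IMU is fast but drifts, while GPS is slow and noisy but drift-free.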

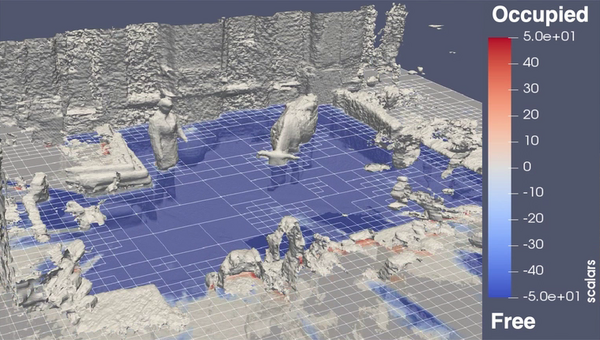

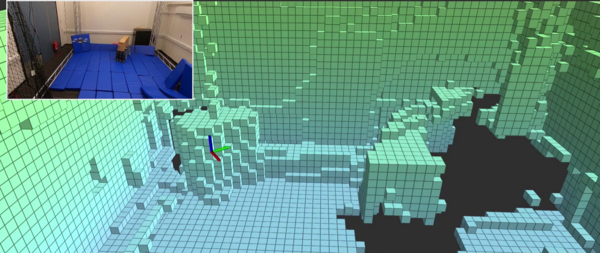

(Dense) Map Representations

Building scalable, high-quality maps and being able to localise in them is a primary need for truly autonomous robots if we want them to achieve complex tasks. Cameras (including colour and depth cameras such as the Microsoft Kinect) have become an increasingly popular choice for this purpose, owing to the rich information about the environment contained in images. Simultaneous Localisation And Mapping (SLAM) from imagery remains an open challenge, in particular regarding scalability in space and time, denser maps and higher accuracy, all while maintaining real-time operation. Importantly, map representations should also intrinsically support efficient navigation (e.g. motion planning); much recent work has therefore been devoted to volumetric occupancy mapping, which supports fast free-space queries.
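Occupancy maps are commonly stored as per-cell log-odds, which turns the Bayesian update into an addition and makes a free-space query a single lookup. The sketch below is a 2D simplification of the volumetric (3D) case; the increments, clamping bounds, free threshold and names such as `OccupancyGrid` are hypothetical choices, not taken from any particular system. Unknown cells are deliberately not reported as free.

```python
import math

L_FREE, L_OCC = -0.4, 0.85   # assumed log-odds increments for free / occupied evidence
L_MIN, L_MAX = -2.0, 3.5     # clamping bounds, so later contradictory evidence can still flip a cell
FREE_THRESH = -0.5           # hypothetical threshold below which a cell counts as free


def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    def __init__(self, width, height):
        self.w, self.h = width, height
        # log-odds 0.0 means unknown (occupancy probability 0.5)
        self.logodds = [[0.0] * width for _ in range(height)]

    def integrate_ray(self, sensor, hit):
        """Mark cells between sensor and hit as free and the hit cell as occupied."""
        ray = bresenham(*sensor, *hit)
        for i, (x, y) in enumerate(ray):
            if 0 <= x < self.w and 0 <= y < self.h:
                delta = L_OCC if i == len(ray) - 1 else L_FREE
                self.logodds[y][x] = min(L_MAX, max(L_MIN, self.logodds[y][x] + delta))

    def probability(self, x, y):
        """Convert a cell's log-odds back to an occupancy probability."""
        return 1.0 - 1.0 / (1.0 + math.exp(self.logodds[y][x]))

    def is_free(self, x, y):
        """Free-space query: only observed-free cells count; unknown is not free."""
        return self.logodds[y][x] < FREE_THRESH


if __name__ == "__main__":
    grid = OccupancyGrid(10, 10)
    for _ in range(2):                        # two identical range measurements
        grid.integrate_ray((0, 0), (5, 0))
    print(grid.is_free(2, 0), grid.is_free(5, 0), grid.is_free(0, 5))
```

A volumetric map applies the same update per voxel along each depth-camera ray; scalable systems typically replace the dense array with a hierarchical or hashed structure such as an octree.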

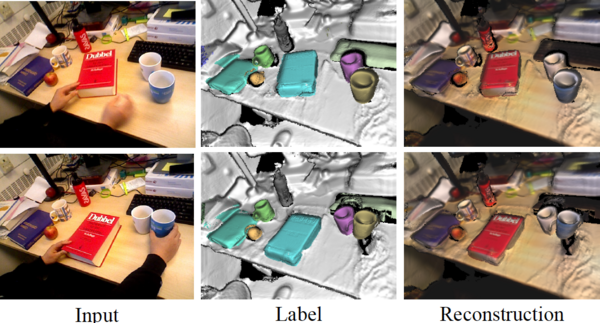

Semantic, Object-level and Dynamic SLAM

For meaningful interaction of a mobile robot with its environment, as well as with human operators, an accurate pose and dense maps will not be sufficient: we also need semantic information and a segmentation into 3D objects and hierarchies of objects, which are, importantly, concepts shared with humans. This deeper understanding allows a robot to operate safely with respect to its environment and its tasks. Moreover, a robot needs to understand the motion of individual objects, or of a person performing a task, in order to infer what is happening and to forecast what might happen next.

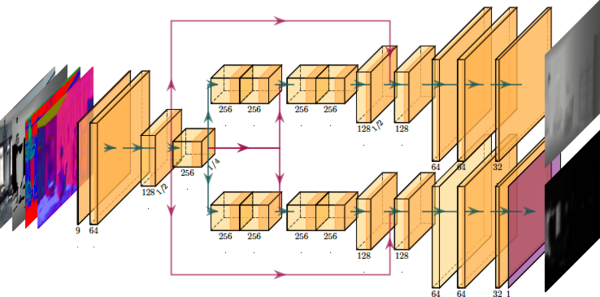

Machine Learning (Including Deep Learning)

Machine learning, and Deep Learning in particular, can be used throughout localisation, mapping, navigation and control; the field is therefore being actively researched, along with methods to best leverage these techniques in a mobile robotics context. This includes questions around environment representations, learning uncertainty, online learning, and how best to combine learned models with sensor measurements.

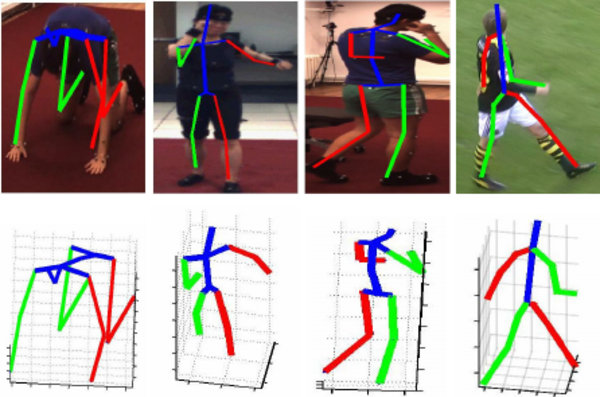

People Tracking & Human-Robot Interaction

For safe and efficient interaction between mobile robots and humans, it is key for the robot to understand human behaviour, ranging from a person's 3D body pose to their high-level actions. This research area includes questions about good learned representations for human modelling, making model predictions robust despite the large variety of human body shapes and the multi-modality of human actions, and leveraging contextual information for accurate predictions.

Robot Navigation

Research on robot navigation focuses on leveraging the above SLAM techniques at an appropriate level of abstraction to support planning and executing safe actions. Importantly, this includes exploring an unknown environment (i.e. only moving into observed free space). It may also require semantic and dynamic understanding, including motion forecasting of e.g. people, in order to move around safely without collisions.

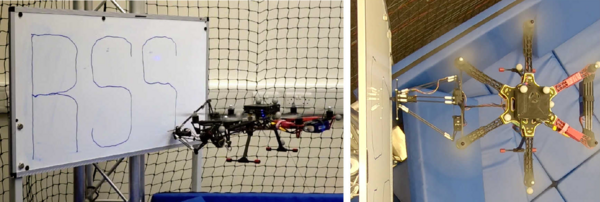

Physical Interaction

Beyond safe navigation, the mobile robots of tomorrow may need to interact physically with their environments in order to complete ever more complex tasks. Examples include robots grasping objects in warehouse automation or domestic settings (pick-and-place over long distances). As another example, mobile robots may be deployed in construction scenarios, where drones could carry out tasks such as painting and drilling, or where ground-based robots might assemble a structure. All of these applications crucially depend on a meaningful geometric and semantic understanding of the robot's surroundings, and they extend the safe navigation stack further.

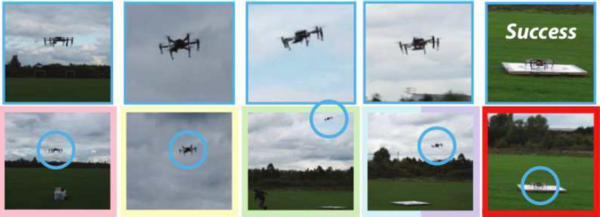

Drones

Drones serve as an ideal test and demonstration platform to showcase the above capabilities, from SLAM to control, navigation and manipulation. Published algorithms have been run on board Unmanned Aerial Systems (UAS), on both multicopter platforms and solar aeroplanes. Robustness, accuracy and real-time capability under computational constraints are the main challenges here, since, for safety reasons, we want to rely only on on-board computation: communication links may break.