News

01/10/2025: Anran Zhang joining MRL@TUM

Welcome, Anran, who is starting as a PhD student at the lab!

19/07/2024: RSS'24 Workshop

We held the workshop "Navigation & Mobile Manipulation in Challenging and Cluttered Natural Environments" in Delft, Netherlands, during RSS 2024. Organised as part of the EU-funded DigiForest project, the workshop covered robots in natural environments and their applications to environmental monitoring, forestry, and precision agriculture.

We thank our speakers and attendees. For more information: https://nature-bots.github.io.

13/05/2024: 4 Papers at ICRA 2024

We will be presenting 4 papers at ICRA 2024:

- Anthropomorphic Grasping With Neural Object Shape Completion: We introduce a novel approach that harnesses object-centric spatial scene understanding to enhance anthropomorphic manipulation. Our framework accurately determines the pose and full geometry of objects from partial observations, using neural shape completion and local shape confidence. This greatly improves robotic grasping and manipulation of previously unseen objects. Session: 16:30-18:00, Paper TuCT27-NT.6

- FuncGrasp: Learning Object-Centric Neural Grasp Functions from Single Annotated Example Object: We present a framework that infers dense yet reliable grasp configurations for unseen objects. Unlike previous works that only transfer a set of grasp poses, our approach transfers configurations as an object-centric continuous grasp function across varying instances. Our framework significantly improves grasping reliability over the baselines. Session: 10:30-12:00, Paper TuAT27-NT.7

- Control-Barrier-Aided Teleoperation with Visual-Inertial SLAM for Safe MAV Navigation in Complex Environments: We propose a method for intuitive, easy, and safe teleoperation of drones in entirely unknown environments, closing the loop between control, VI-SLAM, and 3D mapping using only on-board computation. Session: 16:30-18:00, Paper ThCT23-NT.1

- Tightly-Coupled LiDAR-Visual-Inertial SLAM and Large-Scale Volumetric Occupancy Mapping: We present a fully tightly-coupled LiDAR-Visual-Inertial SLAM system and 3D mapping framework that applies local submapping strategies to scale to large environments. We introduce a novel, correspondence-free, and inherently probabilistic formulation of LiDAR residuals, expressed only in terms of the occupancy fields and their respective gradients. These residuals can be added to a factor graph optimisation problem, either as frame-to-map factors for the live estimates or as map-to-map factors aligning the submaps with respect to one another. Session: 16:30-18:00, Paper ThCT26-NT.2

01/04/2024: Yannick Burkhardt joining SRL@TUM

Welcome, Yannick, who is starting as a PhD student at the lab!

New EU Project - AUTOASSESS

We are joining forces with other university labs and industry partners to leverage AI and robotics to perform vessel inspections, removing human surveyors from dangerous and dirty confined areas. For more information: https://autoassess.eu/

22/03/2024: Lab Retreat

Greetings from our lab trip in Garmisch! We had interesting discussions about the future of robotics and research ethics, and worked on porting our stack to ROS2.

31/01/2024: 3 ICRA Papers accepted

We are happy to announce that 3 papers have been accepted to ICRA 2024.

- Tightly-Coupled LiDAR-Visual-Inertial SLAM and Large-Scale Volumetric Occupancy Mapping

- Control-Barrier-Aided Teleoperation with Visual-Inertial SLAM for Safe MAV Navigation in Complex Environments

- FuncGrasp: Learning Object-Centric Neural Grasp Functions from Single Annotated Example Object

For further information, please see our publication pages: https://srl.cit.tum.de/publications.

30/10/2023: RA-L Paper accepted

In our RA-L'23 work "Anthropomorphic Grasping with Neural Object Shape Completion", we leverage human-like object understanding: we reconstruct and complete the full geometry of objects from partial observations and manipulate them using a 7-DoF anthropomorphic robot hand.

23/10/2023: Jiaxin Wei joining SRL@TUM

Welcome, Jiaxin, who is starting as a PhD student at the lab!

04/09/2023: Jaehyung Jung joining SRL@TUM

Welcome, Jaehyung, who is starting as a postdoc at the lab!

30/06/2023: 3 IROS Papers accepted

We are happy to announce that 3 papers have been accepted to IROS 2023.

- BodySLAM++: Fast and Tightly-Coupled Visual-Inertial Camera and Human Motion Tracking

- GloPro: Globally-Consistent Uncertainty-Aware 3D Human Pose Estimation & Tracking in the Wild

- Accurate and Interactive Visual-Inertial Sensor Calibration with Next-Best-View and Next-Best-Trajectory Suggestion

For further information, please see our publication pages: https://srl.cit.tum.de/publications.

25/05/2023: RA-L Paper accepted

In our RA-L'23 work "Incremental Dense Reconstruction from Monocular Video with Guided Sparse Feature Volume Fusion" by Xingxing Zuo, Nan Yang, Nathaniel Merrill, Binbin Xu, and myself, we propose a deep feature volume-based dense reconstruction method that predicts TSDF (Truncated Signed Distance Function) values from a novel sparsified deep feature volume in real time. An uncertainty-aware multi-view stereo (MVS) network is used to estimate the physical surface locations in the feature volume.

Video: https://youtu.be/bY6zffvbSGE

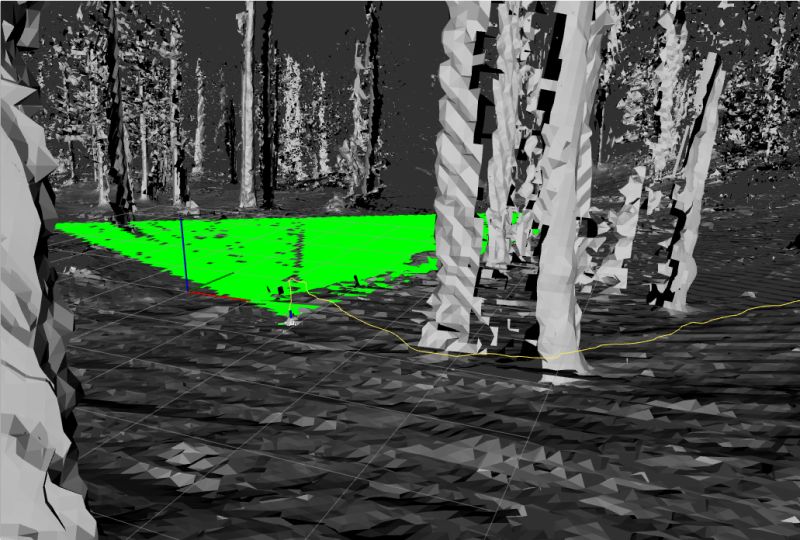

05/2023: Field Experiments in Finland

After quite a long break since our last field trip, SRL went to Evo (Finland) with the DigiForest EU project team to test our autonomous drone flight stack, including VI-SLAM and real-time dense mapping in the loop. Lots of fun, but of course also a lot of work!

29/03/2023: Code Release for MID-Fusion, Deep Probabilistic Feature Tracking and VIMID

We are super excited to announce that we have just released the code for our object-level SLAM work on GitHub: MID-Fusion, Deep Probabilistic Feature Tracking, and VIMID!

Object-level SLAM aims to build a rich and accurate map of the dynamic environment by segmenting, tracking and reconstructing individual objects. It has many applications in robotics, augmented reality, autonomous driving and more.

We hope our code will be useful to researchers and practitioners, and we welcome any feedback, questions, or suggestions!

05/03/2023: ICRA paper accepted

In our ICRA'23 work “Finding Things in the Unknown: Semantic Object-Centric Exploration with an MAV” by Sotiris Papatheodorou, Nils Funk, Dimos Tzoumanikas, Christopher Choi, Binbin Xu, and myself, we broaden the scope of autonomous exploration beyond just uncovering free space: we study the task of both finding specific objects in unknown space and reconstructing them to a target level of detail while exploring an unknown environment.

12/02/2023: Lab Retreat

Greetings from our lab trip in Austria! We had interesting discussions about the future of robotics and artificial intelligence, research ethics, and many more.

09/2022: New EU Project - DigiForest

Together with several academic and industry partners, we are developing tools for digital analytics and robotics for sustainable forestry. For more information: https://digiforest.eu/

15/12/2022: Sebastián Barbas Laina joining SRL@TUM

Welcome, Sebastián, who is starting as a PhD student at the lab!

14/11/2022: Hanzhi Chen joining SRL@TUM

Welcome, Hanzhi, who is starting as a PhD student at the lab!

02/08/2022: 3 IROS papers accepted

We are happy to announce that we will present three papers at IROS 2022:

- Our recent work “Visual-Inertial SLAM with Tightly-Coupled Dropout-Tolerant GPS Fusion” by Simon Boche, Xingxing Zuo, Simon Schaefer, and myself has been accepted to IROS 2022! It builds upon the real-time visual-inertial (VI) SLAM framework OKVIS2 and fuses GPS measurements in a tightly-coupled manner within the underlying factor graph. The framework provides unified support for initialisation into the global coordinate frame, either from the start or after an initial VI-SLAM-only phase, as well as for re-initialisation and re-alignment after potentially long GPS dropouts, during which VIO/VI-SLAM drift accumulates. At the same time, it supports VI-SLAM loop closures at any time, whether or not GPS is available. For more information, please visit the project webpage!

- Our recent work "Visual-Inertial Multi-Instance Dynamic SLAM with Object-level Relocalisation" by Yifei Ren*, Binbin Xu*, Christopher L. Choi, and myself has been accepted to IROS 2022! It builds upon our previous work MID-Fusion by integrating IMU information. Even in extremely dynamic scenes, it robustly optimises the camera pose, velocity, and IMU biases, and builds a dense, object-level 3D map of the environment. In addition, when an object is lost or moves out of the camera view, our system can reliably recover its pose using object relocalisation. For more information, please visit the project webpage!

- Our recent work "Learning to Complete Object Shapes for Object-level Mapping in Dynamic Scenes" by Binbin Xu, Andrew J. Davison, and myself has been accepted to IROS 2022! We propose a new object map representation that preserves the details observed in the past while completing the missing geometry. It sits between reconstructing object geometry from scratch and mapping with object shape priors. We achieve this by conditioning the shape completion on both the integrated volume and a category-level shape prior. Our results show that completing the object geometry leads to better object reconstruction as well as better tracking accuracy. For more information, please visit the project webpage!

25/07/2022: ECCV paper accepted

Our recent work “BodySLAM: Joint Camera Localisation, Mapping, and Human Motion Tracking” was accepted to ECCV 2022! We show that jointly optimising camera trajectories and human position, shape, and posture improves the respective estimates compared to estimating them separately. Furthermore, we demonstrate that we can recover accurate scale from monocular videos using our proposed human motion model. For more information, please visit the project webpage!

22/06/2022: High Schools @ SRL

Today, as one of five participating research labs at TUM, we had the chance to introduce students from all over Bavaria to the fascinating world of robotics. Thanks to the Bavarian Academy of Sciences and Humanities for organising the event!

01/05/2022: Several open positions

SRL is currently considering PhD and postdoc applicants with a background in SLAM and robot/drone navigation for the following projects:

- Digital Forestry (EU Project)

- Spatial AI for Cooperative Construction Robotics

- Multimodal Perception

If you are interested, please apply through the Chair's portal and mention which of these projects you are interested in.

01/02/2021: Smart Robotics Lab moving to TUM

As of 1 February 2021, the Smart Robotics Lab is starting its move to, and re-start at, TUM. We are excited to continue our mobile robotics research!